Mastering Apex Programming - A Book Review

My review of the book “Mastering Apex Programming”

Some time in 2023 there was an amazing deal on Humble Bundle for a set of Salesforce-related books: 20 books for a minimum payment of $30 (or something about that amount). Whilst I wasn’t really desperate for a whole lot of Salesforce books to read, it was too good a deal to pass up so I purchased “The Salesforce CRM Certification Bundle”. As I did not have any Salesforce Certifications (discounting the Slack Developer Certification I got in 2022) at least a few of the books might be helpful.

The first book I decided to read was not one of the Certification guides but a book titled “Mastering Apex Programming”. I’d consider myself a pretty good Apex programmer but I am mindful I came to Apex from Java (and a whole lot of other languages) and may have brought some bad practices with me. There might also be some interesting language features or edge cases the book might teach me about. I actually finished the book some months back and have been meaning to write a short review ever since.

Overall I think this is an OK book. It’s not amazing but it’s certainly not terrible and offers sound advice and guidance. In places it covers a little more than just Apex programming, occasionally expanding to cover client management and interactions. This may be appropriate for some Apex developers (those in consulting roles) but is less relevant for those working for ISVs.

The book is in 3 main sections. The first, “Triggers, Testing and Security”, covers the bread and butter basics of what Apex is used for. Apex was originally a trigger language so it makes sense to cover this most core of use cases first and to use it as a vehicle for looking at the most common mistakes made in Apex development, such as DML in loops. It also covers how to debug your code and make it secure, and outlines a trigger framework. This section is pretty good and, as far as I am aware, still up to date.

However there is one glaring omission in the security section. User Mode SOQL/DML is not covered. This is relatively recent and highlights the issue with using books, which are a point-in-time reference, to try and learn a constantly evolving platform. User Mode SOQL/DML makes a lot of the discussion of CRUD and FLS checks unnecessary and really simplifies an Apex developer’s life.
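For anyone who has not met it yet, here is a minimal sketch of what user mode looks like. It assumes the standard Account object and a running user with some access to it; the record names are purely illustrative.

```apex
// Query in user mode: the platform enforces the running user's object
// permissions, field-level security and sharing, and the query fails with
// an exception if the user cannot access something it references.
List<Account> accounts = [
    SELECT Id, Name
    FROM Account
    WITH USER_MODE
    LIMIT 10
];

// DML in user mode using the "as user" keyword form.
Account newAccount = new Account(Name = 'Example Account'); // illustrative data
insert as user newAccount;

// The equivalent style through the Database class.
newAccount.Name = 'Example Account (renamed)';
Database.update(newAccount, AccessLevel.USER_MODE);
```

Compared with hand-written describe checks before every query and DML statement, the access check here is a single keyword or argument.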

Query with binds, which is even more recent, is also not mentioned in the section on preventing SOQL injection attacks. Again this should be the go-to for developers and not having it in the book makes it feel dated.
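As a rough sketch of the idea, a dynamic query can take its bind values from a map instead of having them concatenated into the query string. The filter value and the variable names here are assumptions for illustration.

```apex
// Untrusted input, for example from a search box (illustrative value).
String userInput = 'Acme%';

// Bind values are supplied through a map rather than concatenated into the
// query string, so the input cannot change the structure of the query.
Map<String, Object> binds = new Map<String, Object>{
    'namePattern' => userInput
};

List<Account> matches = Database.queryWithBinds(
    'SELECT Id, Name FROM Account WHERE Name LIKE :namePattern',
    binds,
    AccessLevel.USER_MODE
);
```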

The second section is on Asynchronous Apex and Apex REST. Quite why these two are lumped together is not entirely clear. Perhaps it’s because one common use case of async Apex is making callouts so it made sense to cover the other side of that coin. This section feels old. Really it should recommend that all new async code is written using Queueables and discuss a Queueable framework in the same way that it discusses a Trigger framework. Queueables are now the clear answer to almost all async programming on the platform (and once serializable Query Cursors are released the last reasons to use Batch Apex will go away).

The book is missing any discussion of Queueable Finalizers. This is unforgivable. Finalizers are what make Queueables the best option for async. Without them it is impossible to write truly predictable async code that can handle failure gracefully. I think this is the biggest issue with the whole book. There is no way you have “mastered” Apex Programming without knowing about these.
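To show why they matter, here is a minimal sketch of a Queueable that attaches itself as its own Finalizer. The class name ResilientJob is hypothetical and the recovery step is left as a comment; what that recovery should be depends entirely on the job.

```apex
// ResilientJob is a hypothetical name used for illustration.
public class ResilientJob implements Queueable, Finalizer {

    // Runs as the Queueable itself.
    public void execute(QueueableContext context) {
        // Attach the finalizer first so that it still runs if the work
        // below fails, including with an uncatchable limit exception.
        System.attachFinalizer(this);

        // ... the real work would go here ...
    }

    // Runs after the Queueable finishes, whether it succeeded or failed.
    public void execute(FinalizerContext context) {
        if (context.getResult() == ParentJobResult.UNHANDLED_EXCEPTION) {
            System.debug(
                LoggingLevel.ERROR,
                'Job ' + context.getAsyncApexJobId() + ' failed: ' +
                context.getException().getMessage()
            );
            // Recovery goes here: record the failure, release any locks,
            // or enqueue a single follow-up job to retry.
        }
    }
}
```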

Similarly to the missing items above it also does not mention AsyncOptions. These are only a few releases old so this is not surprising. However these really make async with Queueables so much better.
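One thing AsyncOptions allows is capping how deep a chain of Queueables can go. The sketch below assumes a hypothetical ChainedJob class and an arbitrary depth of 5.

```apex
// ChainedJob is a hypothetical name; the depth of 5 is arbitrary.
public class ChainedJob implements Queueable {

    // Starts the chain with a cap on how deep it may go.
    public static Id start() {
        AsyncOptions options = new AsyncOptions();
        options.MaximumQueueableStackDepth = 5;
        return System.enqueueJob(new ChainedJob(), options);
    }

    public void execute(QueueableContext context) {
        // ... one slice of work would go here ...

        // Only chain again while the configured depth has not been reached.
        Boolean atLimit = AsyncInfo.hasMaxStackDepth() &&
            AsyncInfo.getCurrentQueueableStackDepth() >=
            AsyncInfo.getMaximumQueueableStackDepth();
        if (!atLimit) {
            System.enqueueJob(new ChainedJob());
        }
    }
}
```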

The final section is on Apex performance. I had no real issues with this. I guess performance profiling and improving performance have not changed much for a number of years. It would have been nice to mention the Certinia Log Analyser which can make performance analysis so much easier. And there was no mention of cold start problems and how to avoid them which was disappointing.

So all in all an OK book that is starting to show its age. No real errors, just missing things of various levels of importance.

Most likely my next book review from this bundle will be the “Salesforce Platform App Builder Certification Guide” as I intend writing this exam soon.

How Super is Super

What are the differences between older and newer Superbadges? Are all Superbadges equal? Or are some a little less super?

As I continue to rack up the points and badges in a completely pointless quest to move up the Superqbit leaderboard I’ve been on a bit of a Superbadge rip recently. I’ve not quite completed every available Superbadge on Trailhead yet but that is at least in sight. As I’ve been working my way through them I’ve noticed a few things.

Modern Superbadges vs Original

You can easily and immediately tell the difference between the original style of Superbadge and the modern ones. One glance at the number of points for completion of the badge and you’ll know. Old school Superbadges will be 8000+ points. Some of them range up as high as 16000 points. For one badge! Modern Superbadges break this down into a series of smaller Superbadge units. Each of these will be 1500-2500 points and each counts as a Superbadge on its own.

This has somewhat devalued the Superbadge. In the past each Superbadge was a considerable investment of time and effort. Perhaps 10-20 hours. It really meant something to get one. For me this meant spending a week’s worth of evenings to get one. With the new ones I can generally bang out a Superbadge unit a night. On the third night I can probably also do the “capstone assessment” to get the overall Superbadge. As you can see this means that, at least for me, the total badge has got easier. This can also be seen from the points, which are now consistently down in the 9000-10000 range rather than 16000.

However making them a bit more bite sized (although 1-2 hours once you’ve completed all the prerequisites is still a bit of time) makes them much more approachable. I am far more likely to take on a Superbadge that I can complete in one night than one of the old monsters that takes a bit more effort to build up to. A good example would be the Lightning Web Components Specialist Superbadge. This is one of the handful I have left to do. It’s one I can do (both technically and because I’ve completed all the prerequisites). It’s in an area I am interested in. But it’s 16000 points. It’s massive. So it’s still to be done. Were this 4 separate Superbadge units I’d have done them by now.

Not all Badges are Equal

The Apex Specialist badge is 13000 points and takes some time. The Advanced Apex Specialist badge is 16000 points and even harder. The Selling with Sales Cloud Specialist is 7000 points and is 90% data entry. It can be completed in a couple of hours. This disparity in complexity is somewhat disheartening (but does make for some very cheap points if you are levelling up).

Supersets?

Why are there only 4 Super Sets? And why is one of these a Billing Super Set? Is Billing really a quarter of Trailhead?

Where is the Flow Super Set? There are probably more Flow Superbadges than any other subject.

It feels like Super Sets are a concept that was launched and then somewhat abandoned.

What’s Missing

The most obvious Superbadge or badges that I’d like to see added are in the AI area. Maybe we’ll see these in 2024

Salesforce Certified Associate

My thoughts on the Salesforce Certified Associate exam

This was the first of the lower-tier Associate certifications that was introduced earlier this year. I sat (and passed) this exam a couple of weeks back. Here are my thoughts (as well as some issues I had sitting this exam)

First of all this is a pretty easy exam. Like the Certified AI Associate exam it took me about 10 minutes once I was able to actually connect (more below). This is an entry level certification designed for those with about 6 months of Salesforce usage experience. Note this is usage - developers may not actually gain that much usage experience!

This exam is definitely more on the admin path with many of the questions being on areas of the platform I have never used as a developer. Luckily I had prepared for this exam and have been on a Superbadge kick recently which has ensured I’ve been using a lot of the platform.

I am a little annoyed to have only got 50% on the Reports & Dashboards as I’d completed the Lightning Experience Reports & Dashboards Specialist Superbadge a few days before I sat this exam. So I should have done better than 50% there! If I ever decide to sit the Salesforce Certified Administrator exam clearly I need to study that area more.

Would I recommend this certification? It’s a cheap and easy way to get on the certification ladder. It helps ensure you actually do know something about using Salesforce which might be an eye opener for some developers! So yes, I’d probably recommend it.

Stay Calm!

I had some real troubles accessing the exam which I did not have with the previous exams I’ve sat. Recently when working from home certain internal sites have been incredibly slow to load. Unfortunately the Kryterion site seemed to be the same. Waiting 2-3 minutes for pages to load as the time ticks past being 10 minutes before the scheduled start to 10 minutes after is stressful! Thankfully I was able to take a few deep breaths once connected and answer the questions

Year of Certification

If this year was my “All Star Ranger” year I want next year to be my Certification year. I’ve got 3 certifications right now. I want to pass at least 4 more next year. We’ll see how that goes and I’ll be sure to post some thoughts on each exam as I sit them

Salesforce Certified AI Associate

Thoughts on the Salesforce AI Associate Certification

Salesforce introduced this new certification a month or so ago. I sat (and passed) this today so thought I’d write a short entry about it.

First off this is an associate level certification. This means it is not as in-depth as the main certifications and as such I think the exams are easier. I’d say this certification means the holder has a base level understanding of the topics but it does not test any real depth. Certainly this is not a technical certification.

In the current AI climate having some certification in this area is probably a good thing so it’s definitely worth doing. If you’ve been keeping up with the various AI trailheads (or just do the prep trailmix) you’ll be good to sit the exam. This is not one where you need to do any additional study or self-learning.

The exam itself consists of 40 multiple choice questions and the passing grade is set at a reasonably generous 65%. As you can see from the transcript I was well above that. You get a really generous 70 minutes to complete the exam. I did not feel I was rushing and I took 10! The questions are akin to Trailhead module quiz questions.

This is a pretty cheap exam to take. If you are lucky enough that your employer will pay for it so much the better! I’d say pretty much everyone working on the platform might benefit from doing this one

Salesforce Winter’24 - Queueable Deduplication

Why the new Queueable deduping is not the killer feature it might initially appear to be

Given my previous blog posts about the lack of Mutexes in Apex and the problems this causes the introduction of Queueable deduplication would seem to solve for some of my use cases. I’ll explore the new feature and how it might be used to solve our queueing use case. Tl;dr - this new feature does not remove the need for Mutexes and I can’t actually see where it can be safely used without Mutexes or locks of some sort

In the Winter ‘24 release notes there is an entry for Ensure that Duplicate Queueable Jobs Aren’t Enqueued. This describes a new mechanism for adding a signature to a Queueable. The platform will ensure that there is only one Queueable with the same signature running at once. Any attempt to enqueue a second Queueable with the same signature throws a catchable exception. Thread safe, multi-user safe, multi app-server safe. Pretty amazing and clearly using the sort of Mutexes we might want for our own code behind the scenes.
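A minimal sketch of the mechanism, following the shape described in the release notes. The class name QueueProcessor and the signature string are my own placeholders.

```apex
// QueueProcessor and the signature string are hypothetical names.
public class QueueProcessor implements Queueable {

    // Returns true if a job was enqueued, false if one with the same
    // signature already exists.
    public static Boolean tryStart() {
        AsyncOptions options = new AsyncOptions();
        options.DuplicateSignature = QueueableDuplicateSignature.Builder()
            .addString('QueueProcessor')
            .build();
        try {
            System.enqueueJob(new QueueProcessor(), options);
            return true;
        } catch (DuplicateMessageException e) {
            // The platform rejected the job as a duplicate.
            return false;
        }
    }

    public void execute(QueueableContext context) {
        // ... process the queue here ...
    }
}
```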

However this is not actually a good enough primitive to build on. Let’s start with our queue example. We want to be able to process a queue of items asynchronously. The queue is represented by sObject records but that is not too important here. The semantics we expect are that we enqueue items. If there is not a process running to process the queue we start one. Otherwise we do not. At the end of the Queueable that processes the items we check for more items to process and if there are more we enqueue another Queueable. We need some form of locking to prevent race conditions around the enqueue and check for more items to process steps.

If we use the new Queueable method it might seem that we can use this to ensure that there is only one Queueable running. But there is still a nasty race condition around the enqueue and check steps. If our Queueable is suspended after it has checked for more items to process but before the Queueable actually ends, and another process enqueues an item in this period, we have a problem (no new Queueable will be started or chained so these items will sit unprocessed). So even with this nice new API we still need our own locks.

But this is a more general problem. Even without the queueing we can consider the general use case. When we are enqueueing to perform some work we are going to be doing that in response to some sort of event. If another event happens whilst the queueable is still running perhaps we don’t want to run two processes in parallel (perhaps they will overwrite each other or cause locking). But we still want the second event to be processed: there is no guarantee that the already running Queueable will pick up changes from our new event. So we need a queue if we are going to use this new method for just about anything. Which means we need Mutexes.

So I have to conclude this new API is not much use on its own and if you implement your own locks you don’t need it.

Road To All Star Ranger

A lookback at almost 3 years of gaining Trailhead badges

As of today I’m an All Star Ranger on Trailhead. When I first started on Trailhead this rank did not even exist! At that time Ranger seemed like a challenging goal. All Star Ranger requires 6 times the number of points and badges. I think if that had been the top rank I’d have believed it was impossible. And yet here I am less than 3 years later. I thought I’d have a small look back at how I got here.

I was interviewing for a post with FinancialForce in late 2020. During the process I was told about Trailhead. Once I accepted the offer in December to start in January 2021 I knew I had to skill up. I knew nothing about Salesforce and my Java knowledge would only take me so far!

I earned my first badge on 17th December 2020. By the time I started on the 4th of January I had 29 badges and a good basic grounding in Salesforce and Apex. A lot of my initial training was in Trailhead so I kept racking up badges.

For a lot of that first year I was doing pretty well at the development side of my job but my platform knowledge was something I felt was lacking. As I moved into Architecture I needed to keep widening my knowledge so I’ve kept on the trail.

600 badges may seem like a huge number (although in the context of Trailhead it’s not really all that many). But over the 1003 days it’s taken to get to All Star Ranger it’s about 1 badge every 1.5 days. So all it takes is consistency really. I would say that as I’ve ranked up the points have become less of a challenge. It’s all about the badges.

Now that I’ve reached the top rank what’s next. Well the obvious answer is to start gaining certifications. But there is always the SuperQBit Leaderboard!

Data Cloud DX (September 2023)

What is Data Cloud like for a Developer in September 2023?

As I said in my Dreamforce 2023 post I’ve been working on Data Cloud producing a demo. This has been my first real “in anger” exposure to Data Cloud (I’ve been on a couple of Data Cloud workshops previously). So how has Data Cloud been to develop on? Read on to find out.

Before I describe the DX a (very) short overview of Data Cloud might be in order. This is still pretty new to the Salesforce ecosystem and most developers will not yet have played with it. Data Cloud is a Data Lake/Data Warehouse product (Salesforce likes the term Data Lakehouse) that enables the ingestion of data from multiple sources on and off platform, the harmonisation of this data and actions to be taken based on insights pulled from the data. All at massive scale (trillions of records) and near-real-time performance.

Raw data ingested from any source will be stored in a Data Lake Object (DLO). These can be mapped to Data Model Objects (multiple DLOs can map to one DMO) and Data Transforms can manipulate the DLOs to map in additional fields, aggregate, filter etc. DLOs represent the Data Lake. DMOs are more like a Data Warehouse layer that is the business facing model of the data. Calculated Insights can run on the DMOs to produce windowed insights with dimensions and measures. Data Actions allow integration back to Salesforce orgs, posting Platform Events whenever a DMO or CI record is written.

Now that we understand Data Cloud at a very high level how is it for a developer? Well the first thing to note is that it’s currently pretty hard for your average developer to get a Data Cloud enabled org. Certainly, as of today, you can’t just spin up a scratch org with a feature enabled.

The second general thing to note is that for iterative development Data Cloud is not great. Developers are used to writing a bit of code, pushing it to an org, trying it out, changing the code and repeating this loop multiple times. In a normal Salesforce org this works reasonably well (although could be faster here too). In Data Cloud this cycle is slow. Really slow. There is no real way to do something right now. Pretty much everything is actually scheduled and run in batches. So when you click a “Refresh Now” button what you are really saying is “schedule this for a Refresh soon”. So if you need to ingest some data, run a data transform, then a Calculated Insight you could be waiting around for half an hour.

This is all made worse by the lack of dependency based scheduling. There is no way of saying “when any of these data streams are completed run this data transform” for instance. Most processes can be scheduled to run at specific times. Which might suffice for production (although I have my doubts) but in development this means you start some ingests, refresh the screen until done, then run the transform, refresh that screen until done etc. This is a very frustrating way to work!

In the next post I’ll talk about the specific frustrations with the Data Transform editor/process…

Dreamforce 2023

Read about the two demos I’ve been working on from Dreamforce this year

Dreamforce is fast approaching. As much as I’d love to be there I won’t be. Not this year. Maybe next year. But some things I have been working on will be. In future posts I’ll write a bit more about them, and maybe I’ll be able to share some videos. For now I’ll just say a little about the demos I’ve been working on building and where you can see them if you are lucky enough to be at Dreamforce

The first demo I’ve been working on is a Data Cloud demo. This demo showcases how our CS Cloud product can work with Data Cloud ingesting data from multiple sources that can be on platform or off, work with that data at scale and then use that data to drive intelligent action within our product running on platform. This demo also showcases our ability to use Data Cloud to drive AI models to predict future states.

My part of this demo is the Data Cloud part. It’s been fun working in a novel environment although it’s fair to say that Data Cloud still has some rough edges from a developer experience point of view. I’ll write about the various parts of building a DC solution in future

The second demo is part of a very small closed pilot group that we as a company and I personally have been lucky enough to be part of. This is a demo of how we could incorporate Einstein GPT into our products. Again we are using CS Cloud as our demo product. The demo shows how we can use EGPT to summarise text coming from records inside our org to drive generation of application specific objects in a number of ways.

This demo is very exciting and shows how easy it is to incorporate GPT into your apps using the Salesforce APIs. And this is with some very early APIs that will be totally re-built for wider usage after Dreamforce. Even with these early APIs I was able to achieve good results in a very short period.

You will be able to see the EGPT demo at the App Exchange landing in Dreamforce. Our Data Cloud demo will be presented twice on Thursday at 1:30 and again at 2:00. I hope you can catch our demos and see the exciting work we’ve done with new parts of the platform.

Flow Bulkification and Apex

The ability to call Flow in bulk from Apex is a much needed enhancement that is still not implemented on the platform

For the past few weeks I’ve been working on a side project at work which involves working with Flow from Apex. I’ve learned a few interesting things that I might blog about in future but today I want to write about one annoyance, that I was aware of before, but which has now come to the fore. There is an Idea to upvote here.

There is also this more limited Idea that requests a new type of Flow specifically to allow bulkified offload to Flow from Apex triggers. This is too limited as we will see below.

My Apex code is calling a Flow for every record that it is processing. Normally programmers on the Salesforce platform want to bulkify everything. We’d not like to write code like this

List<SObject> records = …;
for (SObject record : records) {
    doSomethingWithARecord(record);
}

Especially if doing something involves any more SOQL or DML. This sort of thing will almost certainly scale poorly and result in governor limit exceptions. We’d like to do something like

List<SObject> records = …;
doSomethingWithRecords(records);

Where we do any DML or SOQL once, acting on all the records together. This will be much more efficient as each time we make a call to the database the app server our code is running on has to talk over the network to the database server, which will take some time to respond. Much better to do that once rather than 200 times!

However when calling out to Flow this is unfortunately impossible. We have the createInterview method or, if the API name of the Flow is known at build time, the direct Flow.Interview.<FlowName> method. In each case these methods create a single Flow Interview instance which takes a single set of input variables. We cannot create or start these interviews in bulk.

We can still loop and create and start the Flow Interviews singly but as above this might be inefficient, especially if the Flow is doing SOQL or DML operations. Another option would be to pass the Flow a list of records instead of a single record and have the Flow loop. If the Flow is well constructed this could be pretty efficient but anything in the loop in the Flow won’t be bulkified. The Flow designer will have to take care to use collection variables to build lists to operate on in bulk, and many won’t.
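For reference, the one-interview-per-record loop looks roughly like this. The Flow API name Process_Record and its input variable record are hypothetical, as is the wrapper class.

```apex
// FlowInvoker, 'Process_Record' and the 'record' input variable are
// hypothetical names used for illustration.
public class FlowInvoker {

    // Starts one Flow Interview per record. Any SOQL or DML inside the Flow
    // runs once per interview, which is exactly the scaling problem.
    public static void runForEach(List<SObject> records) {
        for (SObject record : records) {
            Map<String, Object> inputs = new Map<String, Object>{
                'record' => record
            };
            Flow.Interview interview =
                Flow.Interview.createInterview('Process_Record', inputs);
            interview.start();
        }
    }
}
```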

However Flow does support cross interview bulkification. When this is triggered, for example when multiple interviews are started for a record triggered flow, actions in the interviews are actually run as a single action across all the Interview instances, allowing it to act in bulk. This allows the Flow author to design natural single record Flows without worrying about bulkification. Most Flow authors think and build this way.

In an ideal world we could access this from Apex. We should be able to create/start multiple interviews in parallel and have them bulkified by the platform. Please vote for the Idea so that this might happen.

Implementing Mutexes using SObjects

In the previous posts I explored the need for platform-level Mutexes and what adding these might mean for the platform. In this post I’ll start exploring some of the complexity of implementing Mutexes using the available tools on the platform (basically SObjects). I’ll start with a naive implementation, identify some problems and then try and find some solutions.

Before I get started what sort of use case am I trying to solve. Let’s imagine we have a workload that can be broken down into a series of tasks or jobs that have to be processed in a FIFO manner system wide. That is regardless of which user we want a single unified queue that is processed single threaded. This might be the case where we are syncing to an external platform for example.

In order to ensure these operations we will expose an enqueue API that looks something like this:

public interface Queue {
    void enqueue(Callable task);
}

When enqueue is called the task is written to the queue (serialised to an SObject). Only one “thread” can access (read or write) the queue at a time so internally in enqueue we would need a Mutex. If there is a Queueable processing the Queue then nothing else is done in enqueue. If there is not a Queueable then one is started. So another Mutex may well be required here.

Our Mutex API would look like this:

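public class Mutexes {
    // Method bodies omitted: this is only the shape of the API.
    // lock returns true if the named lock was acquired.
    static boolean lock(String name) {}
    static void unlock(String name) {}
    // maintain refreshes a held lock so that it is not treated as abandoned.
    static void maintain(String name) {}
}

As we require Mutexes to persist across contexts (and be lockable between contexts) the most obvious option is to use SObjects. Assume we create a Mutex SObject. We can use the standard Name field to represent the name of the lock. We will need a Status field to represent the state of the lock (the code below treats it as a text or picklist value, although a Checkbox would work equally well). And we will need a Datetime to store the last update time on the lock.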

Given this we might assume the lock method could look like this:

static boolean lock(String name) {
    Mutex__c m = MutexSelector.selectByNameForUpdate(name); // Assume we have a selector class
    if (m == null) {
        m = new Mutex__c(
            Name = name,
            Status__c = 'Locked',
            Last_Updated__c = System.now()
        );
        insert m;
        return true;
    }
    if (m.Status__c == 'Locked') {
        return false;
    }
    m.Status__c = 'Locked';
    m.Last_Updated__c = System.now();
    update m;
    return true;
}

Unfortunately this has some issues. One issue is that if two threads both call lock at the same time when there is no existing lock, two new Mutex__c objects will be created when there should only be one. There are various options to solve this. One would be to have a custom field for Name that is unique. We could try/catch around the insert and return false if we failed to insert.
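A rough sketch of that unique-field approach follows. It assumes a hypothetical Unique_Name__c text field on Mutex__c that is marked as unique, and a hypothetical helper class to hold the method.

```apex
// MutexCreator and Unique_Name__c are hypothetical; Unique_Name__c is
// assumed to be a text field on Mutex__c with the "unique" option enabled.
public class MutexCreator {

    // Tries to create the lock record in the locked state. Returns false if
    // another transaction created a record with the same name first.
    public static Boolean tryCreateLocked(String name) {
        try {
            insert new Mutex__c(
                Name = name,
                Unique_Name__c = name,
                Status__c = 'Locked',
                Last_Updated__c = System.now()
            );
            return true;
        } catch (DmlException e) {
            if (e.getNumDml() > 0 &&
                e.getDmlType(0) == StatusCode.DUPLICATE_VALUE) {
                // Lost the race: someone else holds the new lock.
                return false;
            }
            throw e; // Anything else is a genuine failure.
        }
    }
}
```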

The next issue is that it is possible for a lock to be locked and never unlocked. We only expect locks to be held for as long as a context takes to run. In the case of chains of Queueables we have the maintain method to allow the lock to be maintained for longer. But if a context hits a limits exception and does not unlock then no other process will ever be able to lock the lock

The way round this is to allow locking of locked Mutexes if the Last_Updated__c is old enough (this amount of time could be configured). This will prevent locks being held forever and processes stalling.
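The staleness rule itself is simple enough to sketch as a pure function. The class name, the parameter names and the idea of passing the timeout in seconds are all assumptions for illustration.

```apex
// MutexStaleness and its parameters are hypothetical names.
public class MutexStaleness {

    // Decides whether a lock record may be taken by a new caller.
    // A lock that is not held can always be taken. A held lock can only be
    // taken if it has not been updated within the timeout.
    public static Boolean canAcquire(
        String status,
        Datetime lastUpdated,
        Datetime now,
        Integer timeoutSeconds
    ) {
        if (status != 'Locked') {
            return true;
        }
        if (lastUpdated == null) {
            // Assumption: a held lock with no timestamp is treated as stale.
            return true;
        }
        return lastUpdated.addSeconds(timeoutSeconds) < now;
    }
}
```

The maintain method exists so that a long chain of Queueables can keep refreshing Last_Updated__c and never trip this check while it is still making progress.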

unlock and maintain are relatively simple methods that will similarly use for update to hold locks for the duration of their transactions.

This whole thing feels like a fairly fragile implementation that would be far better served at a platform level

SObject Datetime Precision and Testing

When does saving and reading an SObject result in a different value? How can we cope with this in tests?

This week I hit one of those little oddities in Apex that I don’t think I’ve seen mentioned before. Most likely this won’t impact you in your day-to-day usage of the platform. I would not have even noticed apart from my failing tests. So what did I encounter?

We like to think that when we save an sObject to the database then read it back we get the same data that was saved. And in general we do. But it appears that with Datetimes this is not necessarily the case. Broadly speaking we get the same value back. Certainly at the level of precision that most people work at. But actually we lose precision when we save.

Before I go further I only noticed because my test was actually writing to the database and reading the record back. This is not best practice. I was only doing this because I had not implemented any form of database wrapper to allow me to mock out the database from my tests.

I was testing a method that updates an SObject setting a Datetime field to the current date and time. My test structure was something like this

@IsTest
private static void runTest() {
    // Given
    MyObject__c obj = new MyObject__c();
    insert obj;
    Id objId = obj.Id;
    Datetime beforeTest = System.now();

    // When
    myMethod(objId);

    // Then
    Datetime afterTest = System.now();
    MyObject__c afterObject = [Select DateField__c from MyObject__c where Id=:objId];
    // The saved value should fall between the two timestamps
    System.assert(afterObject.DateField__c >= beforeTest && afterObject.DateField__c <= afterTest);
}

To my surprise this failed. Doing some debugging, the date field was being set and a System.debug printed out all three datetimes as being the same. Not too surprising. I would only expect them to vary by a few milliseconds. So using the getTime() method to get the number of milliseconds since the start of the Unix epoch I again debugged.

beforeTest and afterTest were as expected. However the date field was before beforeTest! How is this possible. Well I ran the test a few times before I noticed the pattern. The date field value always ended in 000. So we’d have something like (numbers shortened for brevity):

beforeTest: 198818123

dateField: 198818000

afterTest: 198818456

So saving the Datetime was losing its millisecond precision: the stored value is truncated to whole seconds. In this case storing a value into an SObject and reading it back will result in a different value!

Anyway this is pretty easy to fix in a test by similarly losing the precision from our before and after times as below

@IsTest
private static void runTest() {
    // Given
    MyObject__c obj = new MyObject__c();
    insert obj;
    Id objId = obj.Id;
    // Truncate to whole seconds to match what the database stores
    Long beforeTest = (System.now().getTime()/1000)*1000;

    // When
    myMethod(objId);

    // Then
    Long afterTest = (System.now().getTime()/1000)*1000;
    MyObject__c afterObject = [Select DateField__c from MyObject__c where Id=:objId];
    Long testValue = afterObject.DateField__c.getTime();
    System.assert(testValue >= beforeTest && testValue <= afterTest);
}
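If several tests need the same trick, the truncation could be pulled into a small helper. This is only a sketch and the class and method names are made up; it simply repeats the integer division used in the test.

```apex
// DatetimePrecision is a hypothetical helper name.
public class DatetimePrecision {

    // Drops the millisecond part of an epoch time: integer division by 1000
    // discards the remainder, so 198818123 becomes 198818000.
    public static Long toWholeSeconds(Long epochMillis) {
        return (epochMillis / 1000) * 1000;
    }

    // The same truncation applied to a Datetime value.
    public static Datetime toWholeSeconds(Datetime value) {
        return Datetime.newInstance(toWholeSeconds(value.getTime()));
    }
}
```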

Apex Syntactic Sugar Enabled by Mutexes

What improvements might we see in Apex if Mutexes were implemented? Explore some of the possibilities in this post

In the last post we looked at the possible API for a Mutex object and how that could be used to protect access to a queue/executor model. This required a lot of boilerplate code. What if the language/compiler were enhanced to take advantage of these Mutex objects, simplifying developers’ lives?

In Java there is the concept of synchronised methods (and blocks). What might this look like in Apex? In Java synchronized (note the American spelling) is a keyword and is used on methods like this

public synchronized void myMethod() {
}

This means that only one thread can be executing this method at a time. Access to this method is synchronised by a Mutex without any further work by the developer. This is roughly analogous to

public void myMethod() {
    Mutex m = new Mutex('myMethod');
    m.lock();
    …
    m.unlock();
}

It’s easy to imagine the exact same syntax and semantics being ported to Apex. However lessons from the Java world should inform any Apex implementation. Many times in Java this is too inflexible. A couple of improvements we might want:

Ability to synchronise across multiple methods (to share the same mutex across multiple methods)

Some flexibility in the mutex to still control parallelism but allow multiple unrelated executions to run

The use case for the first is pretty clear. We might have multiple methods that all access the same protected resource. Access to any of these methods needs to be synchronised behind the same Mutex.

If we change the synchronised directive from a modifier to an annotation we could allow for this, and better maintain compatibility with “normal” Apex code.

@synchronised(name='queue')
public void method1() {}

@synchronised(name='queue')
public void method2() {}

Here we have two methods that share the same Mutex so only one thread can execute either of these methods at any point in time.

This would expand to something like this in the compiler

public void method1() {
    Mutex m = new Mutex('queue');
    m.lock();
    …
    m.unlock();
}

public void method2() {
    Mutex m = new Mutex('queue');
    m.lock();
    …
    m.unlock();
}

But what if there were some circumstances where unrelated items could run in parallel? Say we have different regions that don’t interact with each other. Well, we could allow variable interpolation in the Mutex naming to use a method argument like this

@synchronised(name='queue-${region}')
public void method1(String region) {}

This would expand to

public void method1(String region) {
    // String.format uses {0}-style placeholders
    Mutex m = new Mutex(String.format('queue-{0}', new List<Object>{ region }));
    m.lock();
    …
    m.unlock();
}

This would allow access to different regions in parallel but synchronise access to each region.
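For comparison, the per-region idea can already be hand-rolled in Java today. The sketch below is only an illustration of the named-lock pattern, not a proposal for how the platform would do it: NamedLocks, forName and withLock are hypothetical names, and a ConcurrentHashMap of ReentrantLock objects stands in for the imagined named Mutex.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.locks.ReentrantLock;

// Illustrative only: NamedLocks and its methods are hypothetical names.
final class NamedLocks {
    private static final ConcurrentHashMap<String, ReentrantLock> LOCKS = new ConcurrentHashMap<>();

    // The same name always maps to the same lock object.
    static ReentrantLock forName(String name) {
        return LOCKS.computeIfAbsent(name, n -> new ReentrantLock());
    }

    // Runs the action while holding the named lock; finally guarantees release
    // even if the action throws.
    static void withLock(String name, Runnable action) {
        ReentrantLock lock = forName(name);
        lock.lock();
        try {
            action.run();
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        String region = "emea"; // example region value
        withLock("queue-" + region, () -> System.out.println("holding queue-" + region));
    }
}
```

Work for the same region serialises on one lock, while a different region resolves to a different lock and is free to run in parallel.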

This same annotation could also be allowed on anonymous blocks of code.

Hopefully this gives a flavour of what Salesforce could choose to enable to boost developer productivity if the initial investment in Mutexes was made.

Testing Queueable Finalizers Beyond The Limits

What happens when you try to test Finalizers beyond the limits? Well it all goes wrong! Come and find out how

Finalizers on Queueables are fantastic. Finally we can catch the uncatchable. Even if your Queueable hits the governor limits and is terminated, your Finalizer will still get run and can do something to handle the exception. A reasonably likely scenario is that the failed Queueable will be re-enqueued with some change to its scope so that it will complete.

An example Queueable is shown below

public with sharing class TestQueueable implements Queueable, Finalizer {
    private Callable function;
    private Integer scope;

    public TestQueueable(Callable function, Integer scope) {
        this.function = function;
        this.scope = scope;
    }

    // Queueable entry point: attach the Finalizer, then do the work
    public void execute(QueueableContext ctx) {
        System.attachFinalizer(this);
        Map<String, Object> params = new Map<String, Object>{
            'scope' => scope
        };
        function.call('action', params);
    }

    // Finalizer entry point: re-enqueue with half the scope if the job failed
    public void execute(FinalizerContext ctx) {
        if (null != ctx.getException()) {
            System.enqueueJob(new TestQueueable(function, scope / 2));
        }
    }
}

As you can see this is about the most basic implementation of this pattern possible. As a simple form of dependency injection we use a Callable that will take a scope parameter which will control the amount of work done. If the callable throws we just retry with half the scope.
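The retry-with-half-the-scope behaviour is easier to reason about when collapsed into a single loop. The following is a rough sketch in Java (used so it can be run off-platform), not a model of the Queueable machinery: HalvingRetry and run are hypothetical names, a thrown RuntimeException stands in for the failed job, and the loop stops once the scope reaches zero, which is an assumption this sketch adds.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntConsumer;

// Illustrative only: HalvingRetry and run are hypothetical names.
final class HalvingRetry {
    // Runs the work with the given scope; on failure retries with half the scope.
    // Returns every scope that was attempted, in order.
    static List<Integer> run(IntConsumer work, int scope) {
        List<Integer> attempts = new ArrayList<>();
        while (scope > 0) {
            attempts.add(scope);
            try {
                work.accept(scope);
                return attempts; // success: stop retrying
            } catch (RuntimeException e) {
                scope = scope / 2; // failure: halve the scope, as the Finalizer does
            }
        }
        return attempts; // gave up: scope reached zero
    }

    public static void main(String[] args) {
        // Work that fails whenever it is asked to do more than 60 units
        IntConsumer work = s -> {
            if (s > 60) throw new IllegalStateException("too much work");
        };
        System.out.println(run(work, 200)); // prints [200, 100, 50]
    }
}
```

A starting scope of 200 is tried at 200, then 100, then succeeds at 50.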

Like any good developer we now need to test this. A naive test might look something like this

@IsTest
private class TestQueueableTest {
    @IsTest
    private static void execute_whenNoException_doesNotReenqueue() {
        Test.startTest();
        System.enqueueJob(new TestQueueable(new NoThrowCallable(), 200));
        Test.stopTest();
        // Verify no re-enqueue
    }

    // Callable that does nothing and never throws
    private class NoThrowCallable implements Callable {
        public Object call(String action, Map<String, Object> args) {
            return null;
        }
    }
}

This will test the happy path. In the real world we might want to have a mockable wrapper round System.enqueueJob to make this testing easier (and to enqueue with no delay and appropriate depth control for the initial enqueue). This would make the verification simple (with ApexMocks verify). I’ll consider that out of scope for this post as it does not impact the point.

But what about testing re-enqueue? Well that’s simple right - just throw an exception!

@IsTest
private class TestQueueableTest {
    @IsTest
    private static void execute_whenException_reenqueues() {
        Test.startTest();
        System.enqueueJob(new TestQueueable(new ThrowCallable(), 200));
        Test.stopTest();
        // Verify re-enqueue
    }

    // Callable that always throws a catchable exception
    private class ThrowCallable implements Callable {
        public Object call(String action, Map<String, Object> args) {
            throw new MockException();
        }
    }

    private class MockException extends Exception {}
}

This won’t work. The exception will bubble to the top and be re-thrown at stopTest and the test will fail. Oh no! Ah, well that’s not a problem is it? We can catch exceptions like this

@IsTest
private class TestQueueableTest {
    @IsTest
    private static void execute_whenException_reenqueues() {
        try {
            Test.startTest();
            System.enqueueJob(new TestQueueable(new ThrowCallable(), 200));
            Test.stopTest();
        } catch (Exception e) {
            // Swallow the exception re-thrown at stopTest so verification can run
        }
        // Verify re-enqueue
    }

    // Callable that always throws a catchable exception
    private class ThrowCallable implements Callable {
        public Object call(String action, Map<String, Object> args) {
            throw new MockException();
        }
    }

    private class MockException extends Exception {}
}

Now the test will complete and the verification section can run. Note that even without the try/catch in the test the Finalizer would run. We can verify this with debug logs. But what if we only want to re-enqueue on limits exceptions? That makes sense as we are reducing the scope to handle limits. Let’s say we altered our Queueable to look like this

public with sharing class TestQueueable implements Queueable, Finalizer {
    private Callable function;
    private Integer scope;

    public TestQueueable(Callable function, Integer scope) {
        this.function = function;
        this.scope = scope;
    }

    public void execute(QueueableContext ctx) {
        System.attachFinalizer(this);
        Map<String, Object> params = new Map<String, Object>{
            'scope' => scope
        };
        try {
            function.call('action', params);
        } catch (Exception e) {
            // Do something
        }
    }

    public void execute(FinalizerContext ctx) {
        if (null != ctx.getException()) {
            System.enqueueJob(new TestQueueable(function, scope / 2));
        }
    }
}

Now any catchable Exception will be caught and the Finalizer won’t see the exception. But a limits exception cannot be caught and will cause re-enqueue. Great! But our previous test won’t work. We are throwing a catchable Exception. Let’s try again

@IsTest
private class TestQueueableTest {
    @IsTest
    private static void execute_whenLimitsException_reenqueues() {
        try {
            Test.startTest();
            System.enqueueJob(new TestQueueable(new ThrowCallable(), 300));
            Test.stopTest();
        } catch (Exception e) {
            // A limits exception cannot be caught here
        }
        // Verify re-enqueue
    }

    // Callable that runs one SOQL query per unit of scope
    private class ThrowCallable implements Callable {
        public Object call(String action, Map<String, Object> args) {
            Integer scope = (Integer) args.get('scope');
            for (Integer i = 0; i < scope; i++) {
                List<Contact> contacts = [SELECT Id FROM Contact LIMIT 1];
            }
            return null;
        }
    }
}

So now if scope is large enough we’ll get a limits exception for too many SOQL queries. If it’s small enough we won’t. But this test can never succeed. The Limits exception will be re-thrown at Test.stopTest and cannot be caught. So the test will fail.

This means it is impossible to test finalizers with limit exceptions. Unless you know how! If so please get in touch 🙂

Theoretic Mutex API and Use

What might a Mutex API in Salesforce look like? How could it be used? Indulge my imagination as I look at what this might look like

In the last post I outlined the need for Mutexes for Apex. But what might this look like if implemented? In this post I’ll set out a possible API and then show how it could be used.

Before we go any further I should note this is a thought exercise. I have no knowledge of any Mutex API coming. I have no reason to believe this specific API is going to be implemented. If Salesforce happen to add Mutexes to Apex and it happens to look anything like this then I just got lucky!

System.Mutex {
    // Creates an instance of Mutex. Every call to the constructor with the same name returns the same object
    Mutex(String name);

    // Returns true if the named Mutex is already locked
    Boolean isLocked();

    // Attempts to lock the Mutex. If the Mutex is unlocked, locks it and returns. If the Mutex is locked, waits at most timeout milliseconds for it to unlock. Throws an Exception if the call has to wait longer than timeout milliseconds.
    // If the Mutex has been locked for a long time (platform defined) without extendLock being called, locking is allowed even though it is locked (to allow for limits exceptions causing unlock to fail)
    void lock(Long timeout);

    // Extends a lock, restarting the platform-defined period after which a locked Mutex may be locked again
    void extendLock();

    // Releases a lock
    void unlock();
}

There are a few interesting things to note about this API. Mutexes are named. Only one Mutex with the same name can exist.

Attempting to lock a lock has some interesting behaviour. A timeout is passed to the lock method. If the lock cannot be locked in this time period then an exception is thrown. This may be unnecessary - the platform could enforce this instead. In fact it might have to depending on what conditions are placed around the lock method. If DML is allowed before the lock method is called then the timeout would have to be very short or possibly zero. Most likely DML would not be allowed before calling lock and then a timeout can be allowed without causing transaction locking issues.

However due to the nature of the platform there would still be concerns around locks getting locked and never unlocked. If a transaction locks a lock, does some DML then unlocks the lock all is good - a sensitive resource is guarded from concurrent access by a lock. But what if the transaction hits a governor limit and gets terminated? The lock would remain locked. For normal DML we’d expect that the transaction rolls back (or actually was never committed to the database). Locks would have to operate differently. They would have to actually lock in a persistent fashion whenever lock is called.

To protect against locks staying locked some timeout on the length of locks is also required. The system can manage this behind the scenes. However long running processes in chains of Queueables would have to be able to keep a lock locked. Hence the extendLock method.

The isLocked method would have to obey these rules too. Or a canLock method would have to be provided which could result in isLocked and canLock both returning true. For this we’ll assume isLocked returns false if the lock times out without being extended or unlocked.
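The expiry rules described above amount to a lease. As a rough illustration only, here is a Java sketch (chosen so it can be run off-platform) in which the current time is passed in explicitly to keep the behaviour deterministic. LeaseLock, the lease length and the method names are all assumptions. Waiting with a timeout and ownership tracking are left out for brevity.

```java
// Illustrative only: LeaseLock and its methods are hypothetical names.
final class LeaseLock {
    private final long leaseMillis;
    private long expiresAt = Long.MIN_VALUE; // Long.MIN_VALUE means "not locked"

    LeaseLock(long leaseMillis) {
        this.leaseMillis = leaseMillis;
    }

    // Locked only while an unexpired lease exists.
    synchronized boolean isLocked(long now) {
        return now < expiresAt;
    }

    // Takes the lock if it is free or its lease has lapsed; returns false otherwise.
    synchronized boolean tryLock(long now) {
        if (isLocked(now)) {
            return false;
        }
        expiresAt = now + leaseMillis;
        return true;
    }

    // Restarts the lease so a long-running chain of jobs can keep the lock.
    synchronized void extendLock(long now) {
        if (!isLocked(now)) {
            throw new IllegalStateException("lease already expired");
        }
        expiresAt = now + leaseMillis;
    }

    // Releases the lock immediately.
    synchronized void unlock() {
        expiresAt = Long.MIN_VALUE;
    }

    public static void main(String[] args) {
        LeaseLock lock = new LeaseLock(1000);
        System.out.println(lock.tryLock(0));    // prints true
        System.out.println(lock.tryLock(500));  // prints false: lease still running
        System.out.println(lock.tryLock(1000)); // prints true: lease lapsed, lock can be taken
    }
}
```

With these rules a transaction that dies without unlocking only blocks others until its lease lapses, and isLocked reports false from that moment on.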

Now that we have a minimal API that takes the platform foibles into account what could we do with it? Let’s imagine a not too uncommon scenario. We have some sort of action that we want to perform in a single threaded manner. The exact operation is not important. Even if multiple users at the same time are enqueuing actions the actions have to be performed from a single threaded queue in a FIFO manner.

We want to process in the background. We want to use as modern Apex as we can so we’ll use Queueables. When an action is enqueued if there is no Queueable running we should write to the queue (an sObject) and start a Queueable. If there is a Queueable running we write to the queue and don’t start a Queueable. When a Queueable ends if there are unprocessed items it chains another Queueable. If there are no more items the chain ends.

There are multiple possible race conditions here. Multiple enqueues could happen at the same time. Only one should start the Queueable. Enqueue could happen just as a Queueable is ending. At this point the enqueue could think a Queueable is running and the Queueable might think there are no actions waiting, resulting in items in the queue but no process running. A Mutex can solve all this!

global class FIFOQueue {
    // Illustrative lock timeout in milliseconds (the value is arbitrary)
    private static final Long TIMEOUT = 1000;

    // Enqueue a list of QueueItems__c (not defined here for brevity) and process FIFO
    global void enqueue(String queueName, List<QueueItems__c> items) {
        // All queue access must be protected
        Mutex qMut = new Mutex(queueName + 'queue');
        qMut.lock(TIMEOUT); // Intentionally let this throw if it times out
        Mutex pMut = new Mutex(queueName + '_process');
        Boolean startQueueable = false;
        if (!pMut.isLocked()) {
            // This is the only place this Mutex gets locked and is protected by the above Mutex. Only 1 thread can be here so only 1 process can ever get started
            pMut.lock(TIMEOUT);
            startQueueable = true;
        }
        // All Mutex locking done. Can do DML now
        insert items;
        if (startQueueable) {
            System.enqueueJob(new FIFOQueueRunner(queueName), 0);
        }
        // Release the queue Mutex so other enqueues and the runner can proceed
        qMut.unlock();
    }

    // Queueable inner class to execute the items
    private class FIFOQueueRunner implements Queueable {
        private String queueName;

        public FIFOQueueRunner(String queueName) {
            this.queueName = queueName;
        }

        public void execute(QueueableContext context) {
            Mutex qMut = new Mutex(queueName + 'queue');
            Mutex pMut = new Mutex(queueName + '_process');
            pMut.extendLock();
            qMut.lock(TIMEOUT); // Intentionally let this throw if it times out
            // Read the items from the queue to process
            qMut.unlock();
            // Process the items however is necessary
            qMut.lock(TIMEOUT);
            // Update the processed items as necessary and read if there are more items to process
            if (moreItemsToProcess) {
                System.enqueueJob(new FIFOQueueRunner(queueName), 0);
            } else {
                pMut.unlock();
            }
            qMut.unlock();
        }
    }
}

This code is far from perfect. In the real world we’d probably use a Finalizer to do the update of the queueable items allowing for us to mark them as errors if necessary and enqueue again from the finalizer. However hopefully this gives an idea of how Mutexes could be used on the platform.

It is possible to build Mutexes out of sObjects, FOR UPDATE SOQL and a lot of care (and no doubt quite a bit of luck at runtime). But they always feel like the luck might run out. Platform Mutexes would remove this fear. If you think Mutexes are a good idea vote for this Idea on Ideas Exchange.

The Need for Platform Mutexes

Why does Apex need Mutexes?

Apex is a Java-like language but many of the things a Java developer would take for granted are missing. One of the biggest things I noticed when I came to the platform was the lack of a Thread class, Executors, Promises, async/await etc. As you work through the Apex Trailhead modules you eventually do come to async Apex based on Batch, Future and Queueable.

These are somewhat higher level abstractions than threads and the lack of any synchronisation mechanisms (beyond the database transaction ones) leads to the initial belief that these are not needed on the platform. However that is not the case. There are definitely cases where access to resources has to be limited to a single thread of execution.

It is also tempting to say that these challenges are only present when one of these methods for async programming is used. However that ignores the intrinsically multi-threaded nature of the platform. The platform runs on app servers that are multi-threaded. Each org is running on multiple app servers. So each org is capable of supporting many threads of execution. Multiple users may be performing actions at the same time, perhaps interacting with the same objects in parallel. It’s not hard to see how synchronous code in these cases may still result in race conditions that could result in problems.

An example might be a parent-child kind of object model where there is Apex code providing rollups from the children objects. A simple pattern would be to read the parent value, add the value from a new object and write it back to the parent. There is a clear race condition in this simple pattern when two users create children at the same time.
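To make the lost update concrete, here is a small deterministic sketch in Java (used so it can be run off-platform). It does not use real threads. Instead it spells out the unlucky interleaving by hand, with plain integers standing in for the parent rollup field. RollupRace and its method names are hypothetical.

```java
// Illustrative only: RollupRace and its methods are hypothetical names.
final class RollupRace {
    // Two transactions both read the parent before either writes it back.
    static int unsafeInterleaving(int start, int childA, int childB) {
        int total = start;
        int readByA = total;      // transaction A reads the parent value
        int readByB = total;      // transaction B reads the same, soon stale, value
        total = readByA + childA; // A writes its rollup
        total = readByB + childB; // B overwrites it: A's child is lost
        return total;
    }

    // B only reads after A has written, which is what a lock would enforce.
    static int serialised(int start, int childA, int childB) {
        int total = start;
        total = total + childA;
        total = total + childB;
        return total;
    }

    public static void main(String[] args) {
        System.out.println(unsafeInterleaving(10, 5, 7)); // prints 17
        System.out.println(serialised(10, 5, 7));         // prints 22
    }
}
```

The unsafe ordering ends at 17 rather than 22 because the second write was computed from a value read before the first write landed.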

Generally we don’t see issues like this - it only happens with particular designs and implementation patterns. However once async programming is used limiting access to specific objects or resources (like API connections to external systems) may be required. On other platforms the normal way of doing this would be to use a mutex or a semaphore. Sometimes a mutex could be called a lock. Some languages even provide syntactic sugar to do this - Java has synchronized blocks for example.

Apex has no such primitives. Apex developers therefore end up trying to implement these using tools the platform does provide. Generally this takes the form of a Lock sObject that is accessed using FOR UPDATE to hold database locks for the life of the transaction. As we’ll explore in the next blog post there are all sorts of gotchas with this, not the least of which is that you have to use DML to acquire the lock, which does not interact well with callouts.

What if Apex did provide a Mutex object? Well I raised an Idea to see if we could get this very thing implemented. I’d really appreciate if you could vote for this Idea.

What might this look like in code? Let’s take a look

public void lockedMethod() {

Mutex mut = new Mutex('my-name');

try{

mut.lock(1000);

// Do thread sensitive stuff

mut.unlock();

// Do more stuff that is not thread sensitive

} catch (MutexTimeoutException e) {

System.debug('Mutex Timed Out');

}

}

As you can see this would offer developers an easy way to achieve single-threaded access to some resource protected by some sort of named Mutex. Timeouts would prevent waiting forever for the lock. The semantics of what is going on would have to be well defined. lock/unlock of a Mutex would have to happen at that point in the code - not at the end of the transaction like DML on sObjects. More than this, in this model, unlocking a Mutex would, in fact, have to commit any DML from within the mutex lock. Otherwise another thread could write to that object before this transaction commits.

A more platform native approach would be to not have the unlock method and simply release all locked Mutexes when the transaction ends. However this would prevent an important use case from being possible. Chains of Queueables (or Batches if you really want to) might be used to process data and the entire process should be under a single lock. This requires explicit lock/unlock without the Mutex being automatically unlocked at the end of the transaction.

Hopefully this goes some way to justifying why locking may be required. In future posts we’ll look at implementing locks using sObjects, what a more fully fleshed out Mutex object could look like, and the syntactic sugar this might enable to be added to Apex.

Named Credentials vs Remote Site Settings

Why use Named Credentials over the venerable Remote Site Settings? Read on to find out why I think you really should be using Named Credentials in all cases in new code.

In the last few posts in this mini series on Named Credentials I’ve been looking at the new form of Named Credentials and specifically the changes made in the Summer ‘23 release. But Named Credentials are not the only way to make callouts to external endpoints on the Salesforce platform. I was recently advising a team on some new functionality and they wanted to use Remote Site Settings. My strong recommendation was Named Credentials. Let’s explore why.

First of all why might a team (or individual) prefer Remote Site Settings? The first reason might simply be force of habit. Remote Site Settings have been around since API 19, Named Credentials since API 33 (both according to the Metadata API documentation). A lot of examples, including Trailhead, show Remote Site Settings rather than Named Credentials. This alone hardly makes a compelling argument for Remote Site Settings. In fact it might favour the opposite argument - if Remote Site Settings were so good why were Named Credentials added?

The next argument for Remote Site Settings might be perceived complexity. For some reason Named Credentials are perceived as more complex. This is certainly true from an initial setup perspective - a Remote Site Setting is just a label and URI but a Named Credential can require a whole heap of setup, authentication details and more. However once this is done for a Named Credential every callout using that Named Credential gets the authentication for free. With a Remote Site Setting you need to do all the authentication manually. This can be both complicated, especially with OAuth, and error prone. So that is a second point in favour of Named Credentials.

So now that we’ve looked at this from the side of Remote Site Settings what about from the Named Credentials side? The most obvious advantage is the authentication advantage already discussed above. In most cases this would be enough. But what about where no authentication is required? Well Named Credentials still offer advantages (assume we are talking about new-form Named Credentials):

Arbitrary data can be stored with the External Credential and used in custom headers that are automatically added to the request without Apex code

Named Credentials require permissioning to use via a Permission Set - Remote Site Settings allow any callout from any code by any user

Named Credentials can be locked to one or more namespaces - this can be particularly important for ISVs

Named Credentials effectively separate the business logic from the endpoint URI

Let’s examine these advantages. Starting with the last. Separating the business logic from the endpoint URI is a much bigger advantage than it sounds. What happens when the URI changes? Do you want to have to find each and every callout to the endpoint and change them? What if you are an ISV and the endpoint varies for every customer instance? You can use Named Credentials or build your own Custom Setting and string replace in each and every callout. Seems like Named Credentials really win here.

Arbitrary data storage may be helpful in some cases. This is definitely not a strong win in every use case. We saw in a previous post how this data storage capability could be used to implement Basic Auth. It can also be used in other ways. Imagine you are implementing some sort of Jira integration to sell. Jira has two flavours, Cloud and OnPrem. These two flavours have slightly different APIs. You could store which flavour was in use on the Named Credential and switch automatically.

Permissioning should be a big deal for Admins. With a Remote Site Setting you are allowing any code running as any user to access that endpoint. This is a very powerful org-wide permission with no way to restrict it. It is so powerful that including a Remote Site Setting in a managed package causes a confirmation to be required to install it. Named Credentials, at least new ones, require a permission set to be linked to the External Credential. This empowers the admin to control which users can run code that accesses the endpoint. Admins should probably push back harder than they do against packages containing Remote Site Settings.

Named Credentials can be further locked down by locking them to a specific namespace. So a Named Credential in a managed package can be locked down just to the code in that namespace. This means that ISVs are not opening up access to arbitrary code. Admins should see this as a significant advantage.

We could envision an event driven integration architecture where Platform Events are fired whenever the integration needs to communicate off platform. The Apex trigger for these runs as a specific named user. The Named Credential is locked to the integration namespace and only this named user has the permission set assigned. This seems like an ideal situation from a security and control perspective.

tl;dr There is no reason to favour Remote Site Settings and many reasons to favour Named Credentials. All new code should use Named Credentials.

Turning Off "Use Partitioned Domains" for the Data Integration Specialist Superbadge

Don’t make the same mistake I did and lose your in-progress Superbadge org!

As part of my “quest” for All Star Ranger status in Trailhead I was completing the Data Integration Specialist Superbadge recently. Nothing in the badge is particularly challenging but I did hit one slightly frustrating point that I thought would be worth pointing out. I’m not going to offer any solutions to the challenges in the Superbadge itself so if you’re looking for solutions this is not the blog for you!

The superbadge calls out that you (may) need to make a change to your org setup as shown below.

If you are like me you probably skip most of the setup text in Superbadges and jump straight to the challenges to see what you actually need to do. I don’t see a lot of value in the positioning story - it adds nothing to the challenge. So I missed this.

If you miss this you’ll be able to complete the first few challenges and will suddenly hit a wall where the test just fails. And then you’ll go back up and see this note. If this happens don’t do what I did and just rush into making the change in your org. If you do this you’ll not only disconnect the org from Trailhead but you’ll not be able to log back into it and you’ll have to create a new org and start again from the start. Annoying!

Turning off “Use partitioned domains” changes the domain. This will disconnect the org from Trailhead. So before you turn off and deploy the changes make sure you have the username and password for your org. You can then reconnect it to Trailhead and continue with the superbadge.

Good luck on the trail!

New Named Credentials and Basic Auth

Find out how to setup Basic Auth using New Named Credentials

“Legacy” Named Credentials support a whole host of authentication methods. “New” Named Credentials - not so much. One of the basic things missing is Basic Auth. Sure Basic Auth is pretty outdated and really not as secure as we’d like. But there are still plenty of end points out there that offer Basic Auth and it can be useful. So how can we use the new Named Credentials and still use Basic Auth?

Oh no! No Basic Auth!

Fortunately we can actually have basic auth without writing any code (although a little formula will be required).

Before we get into the configuration details what is Basic Auth? Basic Auth is a header passed on the HTTP request. It’ll look something like this

Authorization: Basic dXNlcm5hbWU6cGFzc3dvcmQ=

This is the basic auth header for a username of username and a password of password. It is generated by Base64 encoding the string <username>:<password> where <username> is the username and <password> is the password. Then prefix with Basic and that’s your header value. Pretty simple right?
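If you want to check a header value by hand before wiring up the formula, the encoding is a couple of lines in most languages. Here is a minimal sketch in Java (used so it can be run off-platform), relying only on the standard java.util.Base64 class. BasicAuthHeader and its value method are illustrative names.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Illustrative only: BasicAuthHeader and value are hypothetical names.
final class BasicAuthHeader {
    // Builds the Authorization header value: "Basic " + base64(username:password)
    static String value(String username, String password) {
        String pair = username + ":" + password;
        String encoded = Base64.getEncoder()
            .encodeToString(pair.getBytes(StandardCharsets.UTF_8));
        return "Basic " + encoded;
    }

    public static void main(String[] args) {
        // prints Authorization: Basic dXNlcm5hbWU6cGFzc3dvcmQ=
        System.out.println("Authorization: " + value("username", "password"));
    }
}
```

The output matches the example header above, which is a handy sanity check when the configured Named Credential is not authenticating as expected.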

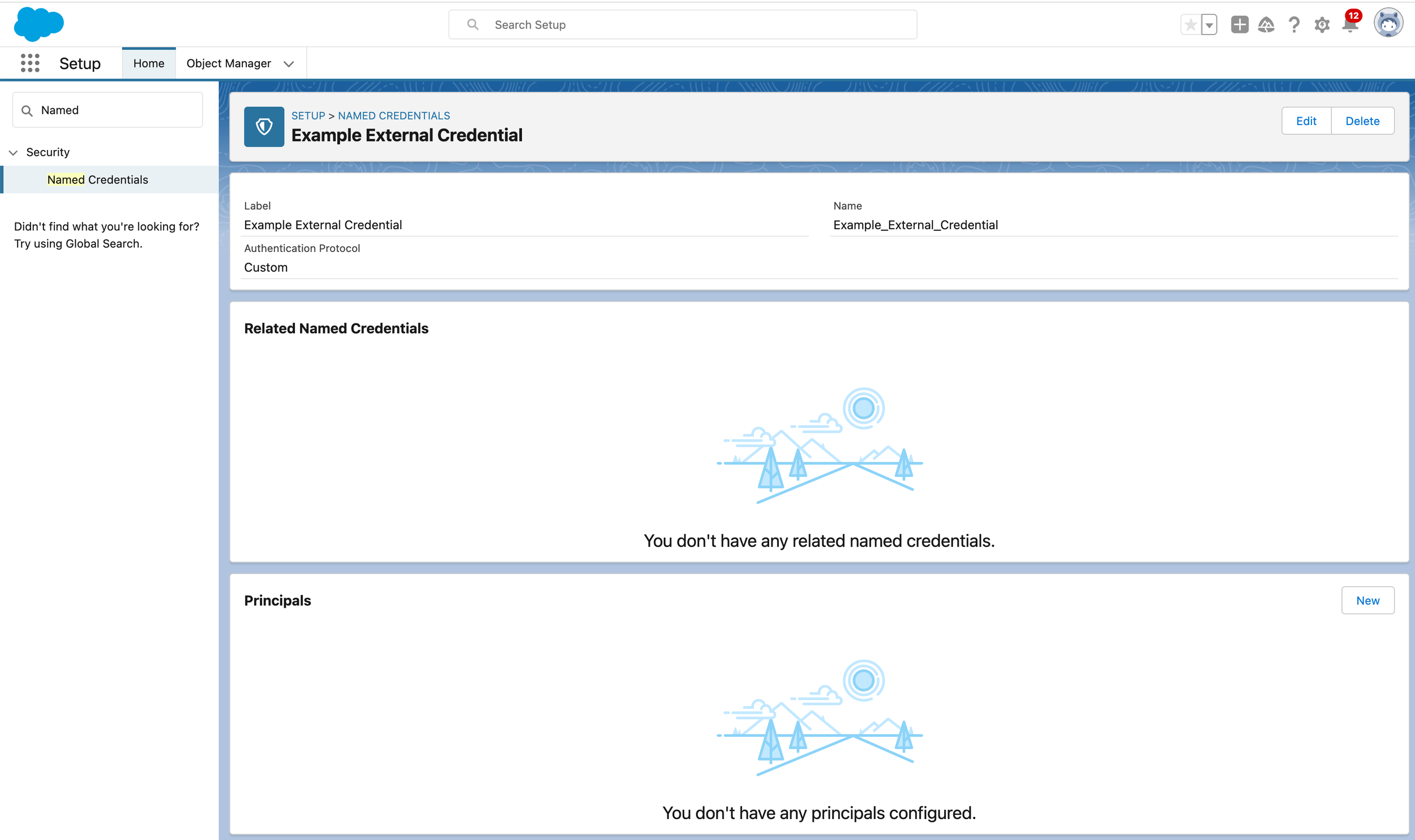

So let’s start by creating an External Credential and adding a Principal with a couple of parameters: one for the Username, one for the Password. Salesforce will protect these for us so we don’t have to worry about the encryption at rest etc - it’s handled for us

Now we can create a Custom Header that uses the parameters from the principal. This will use a formula to calculate the Base 64 encoded value discussed above. The formula is

{!'Basic ' & BASE64ENCODE(BLOB($Credential.BasicAuth.Username & ':' & $Credential.BasicAuth.Password))}

Note that in this formula BasicAuth is the name of the External Credential, and Username and Password are the parameter names. This is a bit fiddly! Maybe use the nice new methods discussed in the previous entry to automate the creation of this.

Save the custom header and then create a Named Credential and add the External Credential to a Permission Set as normal.

Summer '23 Named Credentials for Apex Developers

What do the Summer ‘23 changes to Named Credentials mean for Apex developers and what can you still not do from Apex?

In a previous post I discussed the changes made to Named Credentials in the Summer ‘23 release of Salesforce from an Admin point of view. In this post I’ll examine what this means for the Apex Developer.

Prior to the new form of Named Credentials being introduced in Winter ‘23 there was no “native” way for Apex developers to interact with Named Credentials (beyond using them in callouts) - named credentials could not be created, modified or deleted from a native Apex API. It was possible to do this using the Metadata API. This involved making a callout and using the user session to do so. In recent months Salesforce has started rejecting managed packages that do this during the security review process. Alternative methods using connected apps and OAuth are possible but using the Metadata API to alter security related metadata could still cause a failure.

There are cases where managed packages may want to offer a guided setup experience which may include creating Named Credentials. In some cases these credentials could be packaged. However in some cases the endpoint host will be subscriber specific so a dynamically created Named Credential will be a better choice. How can an Apex developer continue to offer this?

In the Winter ‘23 release it was possible to create and manipulate a new format Named Credential that used an existing External Credential but not create the External Credential using a pure Apex API exposed in the ConnectAPI namespace.

ConnectAPI.CredentialInput input = new ConnectAPI.CredentialInput();

input.authenticationProtocol = ConnectApi.CredentialAuthenticationProtocol.OAuth;

input.credentials = new Map<String, ConnectAPI.CredentialValueInput>();

input.externalCredential = 'MyExternalCredential';

input.principalName = 'MyPrincipal';

input.principalType = ConnectApi.CredentialPrincipalType.NamedPrincipal;

ConnectApi.NamedCredentials.createCredential(input);

The developer name for the External Credential could be retrieved using getExternalCredentials to get a list of all credentials the user can authenticate to.

In Summer ‘23 it appears initially that the final parts of the puzzle are in place. We have the new createExternalCredential method to allow us to create a new external credential using data we gather from the user then create the Named Credential. So all is good!

But think back to the changes introduced in Summer ‘23. The link to the permission set is no longer part of the External Credential. It’s part of the permission set. So creating the External Credential and Named Credential is not enough: the permission set has to be updated. And there is no native Apex API for that. In fact it’s reasonable to assume that this new method is only being made available as it now does not allow Apex to alter the permissions of a user.

So in short you can automate the creation of a non-standard External Credential and Named Credential to automate setup for your users. But you cannot link it to a permission set so the Admin will still have to be prompted to take action.

Named Credentials in Summer '23

What's changed with Named Credentials in the Summer '23 Salesforce release, why these changes have been made and what this means for Admins

In Winter ‘23 Salesforce introduced a new model for Named Credentials which split the endpoint definition from the authentication definition. The endpoint definition remains in the Named Credential with one, or more, External Credentials specifying the authentication details. Different External Credentials can be linked to different Permission Sets allowing control over which users have access to which authentication details. Furthermore the Named Credentials can be limited to certain namespaces.

At the same time some new Apex methods were made available to allow manipulation of certain parts of this setup from Apex without having to go through the Metadata API. Which is great but it did not cover all use cases, specifically it did not allow the creation of Named Credentials (although it did allow the creation of External Credentials). For many managed package use cases it’s desirable to create Named Credentials dynamically so the Metadata API was still required.

This small series of blog posts discusses the changes and improvements in Summer ‘23, both from the perspective of an admin using Setup and from that of an Apex developer.

Changes in Summer ‘23

From an admin perspective the big changes in Summer ‘23 relate to the way that an External Credential is linked to a Permission Set. Prior to Summer ‘23 this was managed from the External Credential side. This was slightly odd. As an admin you were changing the access users had to a resource from somewhere other than the Permission Set (or Profile but that’s going away). Any user you assigned the Permission Set to would have access to the External Credential but you could not see that anywhere on the Permission Set. Which is odd.

When I first started exploring the changes in a Summer ‘23 org I was surprised by the changes and could not initially work out how to get a Named Credential working (having created it from Apex as discussed below).

This is because the Permission Set mappings have gone! Without a permission set mapping the Named Credential could not be used as there was no valid External Credential. A quick search around and I found the changes on the Permission Set page instead.

This places the control where you’d expect it as an admin: on the permission set. It’s easy to look at the permission set and see what that permission set is giving access to, and somewhat harder to accidentally give users permission when you did not mean to.

This also means that External Credentials permissioning falls in line with the existing Named Credentials permissions on permission sets. This is for legacy Named Credentials so should be something admins are used to.

Any other changes you might have wanted to come to modern Named Credentials will have to wait for a future release. The biggest one from my point of view would be the addition of more authentication types, at least to cover all the ones that legacy Named Credentials can use. The new Named Credentials cannot even do Basic Auth (although this can be worked around as we’ll discuss in a later post).